Meta has resumed testing facial recognition technology on Facebook. Three years after discontinuing the technology due to privacy concerns and regulatory pressure, the social media giant announced on Tuesday that it is testing it again to tackle “celeb bait” scams.

Meta will enrol around 50,000 public figures in a trial that automatically compares their profile photos with images in suspected scam ads. If a match is found and Meta determines the ads are scams, they will be blocked.

The celebrities will be informed of their participation and can opt out if they choose. The trial will be rolled out globally from December, excluding regions where Meta lacks regulatory approval, such as the UK, the EU, South Korea, and U.S. states like Texas and Illinois.

Monika Bickert, Meta’s vice president of content policy, explained that the focus is on protecting public figures whose likenesses have been exploited in scam ads.

“We want to provide as much protection as possible, but they can opt-out if they wish,” Bickert said.

This trial highlights Meta’s attempt to balance the use of potentially intrusive technology to combat rising scam numbers against ongoing concerns about user data privacy. Any facial data collected during the trial will be deleted immediately, whether or not a scam is detected.

Meta stated that the tool had undergone thorough internal privacy and risk reviews and had been discussed with regulators and privacy experts before testing began.

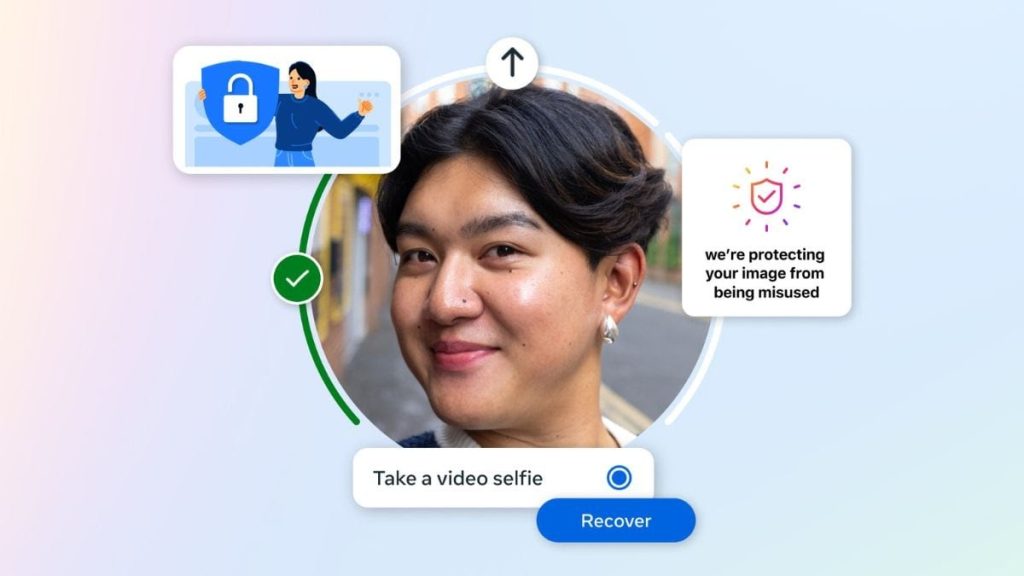

The company is also exploring the use of facial recognition to help non-celebrity Facebook and Instagram users regain access to accounts compromised by hackers or locked due to forgotten passwords.